Reflections on My First Beginner-Level Coding Project (13F Watchlist Automation) with ChatGPT

It’s no wonder that software is good at writing, understanding, and explaining software.

I’ve dabbled very lightly in introductory Python courses. For a while, the jump to actually completing a project felt like one that I wasn’t ready to take, especially without a mentor guiding me through the steps. But ChatGPT changes that. There’s certainly nothing like having someone right by your side who really understands what you are trying to do, but having ChatGPT as a resource is a lot closer to that than having no one at all.

The project that I worked on was basically the automation of a 13F Watchlist like the one pictured below from @greenchipcap.

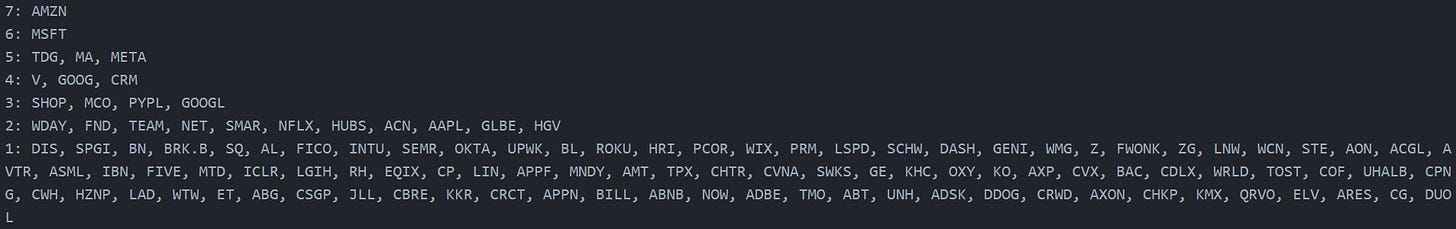

Basically, I wrote two scripts. The first makes a series of API calls to the WhaleWisdom database, retrieving every holding that makes up more than 2.5% of the current portfolio for each fund on a list of funds that I follow and respect. The output ends up in a CSV file and is essentially a column of a couple hundred tickers. I then wrote a second script that turns this information into an output like the one below, showing how often each ticker shows up across all of those funds’ portfolios:

To an experienced coder, this probably doesn’t seem like much. But I really wouldn’t have had much of an idea of where to start without ChatGPT. I simply threw a prompt in there asking it to guide me through this process step by step. I’d follow up with other prompts, asking it to write scripts for me, explain in more detail a certain step, etc. I used the Atom Editor as my environment.
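For anyone who wants a concrete picture of the two steps described above, here is a minimal Python sketch of the filter-and-count logic. It is not the actual script and it does not call WhaleWisdom at all: the `holdings_by_fund` data, the fund labels, and the function names are all made up for illustration. Only the 2.5% threshold comes from the project description.

```python
from collections import Counter

THRESHOLD = 2.5  # percent of portfolio, as described in the post

# Hypothetical sample data: fund label -> {ticker: percent of portfolio}.
# In the real project this would come from API calls, not a hard-coded dict.
holdings_by_fund = {
    "Fund A": {"AAPL": 12.0, "MSFT": 3.1, "XOM": 1.2},
    "Fund B": {"AAPL": 5.5, "GOOGL": 4.0, "MSFT": 2.0},
    "Fund C": {"GOOGL": 8.3, "AAPL": 2.6},
}


def significant_tickers(holdings, threshold=THRESHOLD):
    """Step 1: keep only tickers whose weight is above the threshold."""
    return [ticker for ticker, pct in holdings.items() if pct > threshold]


def count_tickers(funds, threshold=THRESHOLD):
    """Step 2: count in how many funds each significant ticker appears."""
    counts = Counter()
    for holdings in funds.values():
        counts.update(significant_tickers(holdings, threshold))
    return counts


if __name__ == "__main__":
    # Print the most widely held tickers first.
    for ticker, n in count_tickers(holdings_by_fund).most_common():
        print(f"{ticker}: held by {n} fund(s)")
```

`Counter` does the tallying here; a plain dictionary would work too, but `most_common()` gives the sorted view for free.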

You could almost sum it up as an exercise in prompt engineering: trying to get ChatGPT to write code effectively. There were LOTS of iterations of scripts that did not work. It was interesting to see ChatGPT repeatedly make some of the same mistakes in its scripts, needing just a simple reminder of the fixes it had already made. I think that was one of the things that stood out to me - it seemed to have a short memory. I’d often ask it to add a feature to the existing script and present it anew, and the new version would break in some part that was unrelated to the new feature. Rather than digging through the code and trying to find the problem myself, I would commonly use a prompt like, “The code you just submitted produced error message XYZ. This is the code you wrote earlier that worked: [functional code]… how are these two scripts different? Please change the script you submitted to better match the principles and structure of the previous script, and explain what changes you made and why.” Using this sort of approach, you can really get as granular and complex as you want, or zoom out and ask it to explain very basic and simple concepts to you. It’s no wonder that software is good at writing, understanding, and explaining software.
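The “how are these two scripts different?” question can also be checked locally. The sketch below uses `difflib` from Python’s standard library to print a unified diff between a working version and a broken one. The two code snippets and the file labels are invented for the example, and this is a complement to asking ChatGPT rather than a description of what I actually did.

```python
import difflib


def show_diff(working_code, broken_code):
    """Return a unified diff between two versions of a script as one string."""
    diff = difflib.unified_diff(
        working_code.splitlines(),
        broken_code.splitlines(),
        fromfile="working.py",  # labels only; no files are read
        tofile="broken.py",
        lineterm="",
    )
    return "\n".join(diff)


# Hypothetical versions of the same loop; only one line differs.
working = "tickers = []\nfor row in rows:\n    tickers.append(row['ticker'])\n"
broken = "tickers = []\nfor row in rows:\n    tickers.append(row['symbol'])\n"

print(show_diff(working, broken))
```

Lines starting with `-` exist only in the working version and lines starting with `+` only in the broken one, which narrows down where an unrelated change crept in.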

On the one hand, I think there was an initial feeling of discouragement for me, just in how long it took to write a pretty simple program and the fact that I wasn’t really even writing it myself. But upon further reflection, I think it’s just that I am learning my own style and method of writing code that works for me and my situation given the constraints (experience, time, etc.) that I have. Who knows how long it would have taken manually. And as I get more reps at doing this sort of thing, hopefully I will be able to prompt ChatGPT more effectively and maybe even spot some of the errors myself.

I strongly suspect that as the project exists today, there is a lot that could be improved. One example is an easier, more user-friendly way of selecting the funds to include, so that it isn’t just a bunch of numbers: perhaps creating some sort of list or linking to a sheet somewhere so that it is easier to quickly see which fund matches up with ID “523005” in the database, or printing the list of included funds with the output. I also suspect that there is a way to combine the two scripts (the one that pulls the holdings, and the other that sorts them). These will be next steps in trying to clean it up. Another effective next step could be asking ChatGPT to take me through the code line by line and explain what each part does, so I understand exactly how each part works. This will give me a better understanding of the code, and if I ask the right questions, ChatGPT may even realize during the walkthrough that some portion of it is redundant or could have been better written.
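On the fund-ID point, one simple option would be a lookup table kept next to the script. The sketch below is only an illustration of that idea: “523005” is the ID mentioned above, but every other ID and all of the fund names are placeholders I made up, not real WhaleWisdom data.

```python
# Hypothetical mapping from database fund ID to a readable name.
# "523005" is the ID mentioned in the post; the names and the other
# IDs are placeholders, not real database entries.
FUND_NAMES = {
    "523005": "Example Fund A",
    "100001": "Example Fund B",
}


def describe_funds(fund_ids, names=FUND_NAMES):
    """Return one readable 'ID: name' label per fund ID."""
    return [f"{fid}: {names.get(fid, 'unknown fund')}" for fid in fund_ids]


# Print the included funds alongside the rest of the output.
print("Funds included in this run:")
for label in describe_funds(["523005", "100001", "999999"]):
    print("  " + label)
```

Using `names.get(...)` with a fallback means an ID that is missing from the table shows up as “unknown fund” instead of crashing the script.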

I’ll also continue to chip away at courses on, e.g., DataCamp so that I can more easily read and understand what ChatGPT presents to me, or at least so that it feels a bit more familiar.

Related to the apparently short memory I discussed earlier, another possible lesson from this exercise is that it might make sense to feed ChatGPT only one incremental step at a time. This makes it easier to pinpoint what changed in a script, what might have gone wrong, etc. Otherwise, both ChatGPT and I often seemed to get a bit confused when we tried to do too much at once. For example, a next step that I might consider is adding features that help me determine the materiality of each listed holding for my purposes (current position size, increase in position size, low price during the quarter, current price, etc.). Rather than asking ChatGPT to do all of this at once, it might be better to ask it to add one column at a time, with one data point per holding, for a single fund. Then, once all the columns are built out for that fund, try adding another fund, and so on.
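As a rough illustration of the one-column-at-a-time idea, here is a small Python sketch. The rows, the column names, and the `add_column` helper are all hypothetical, and the numbers are invented. The point is only that each step adds a single column and can be checked before the next one is attempted.

```python
def add_column(rows, name, compute):
    """Return new rows with one extra column; the input rows are not modified."""
    return [{**row, name: compute(row)} for row in rows]


# Hypothetical holdings for a single fund; all numbers are made up.
rows = [
    {"ticker": "AAPL", "shares_prev": 100, "shares_now": 150},
    {"ticker": "MSFT", "shares_prev": 200, "shares_now": 200},
]

# Step 1: add one column, then run the script and check it before moving on.
rows = add_column(rows, "share_change", lambda r: r["shares_now"] - r["shares_prev"])
assert all("share_change" in r for r in rows)

# Step 2: only once step 1 works, add the next column.
rows = add_column(
    rows, "pct_change", lambda r: 100 * r["share_change"] / r["shares_prev"]
)

for r in rows:
    print(r)
```

If step 2 breaks, the only thing that changed since the last working version is one call, which is exactly the situation that makes it easy to tell ChatGPT what went wrong.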

A final tactic of note - if nothing seems to be working and all else fails, try just throwing ChatGPT some of the documentation. Many of my prompts were something along the lines of, “Here is some of the documentation… does this change anything about how you want to construct the script?” So try to throw things at it that you know work; it tends to do pretty decently at learning from functional code and applying it to your specific use case.